Introduction

Kubernetes is a tool used to orchestrate and manage containerized applications. Split provides three containerized applications that you can run on your own infrastructure: the Split Evaluator, the Split Proxy, and the Split Synchronizer. Each one handles specific use cases for feature flagging and experimentation with our SDKs and APIs.

Each of the three Kubernetes examples below includes a NodePort service so that the demo app can be reached from outside the cluster. Once an example is up and running, if you are using minikube, you can get the NodePort URL by running:

minikube service pyapp-svc --url

Then enter the returned URL in a browser, and you will see a page showing the pod name and the split treatment. It’s time to embrace Kubernetes and Split.

The Evaluator

Use Case

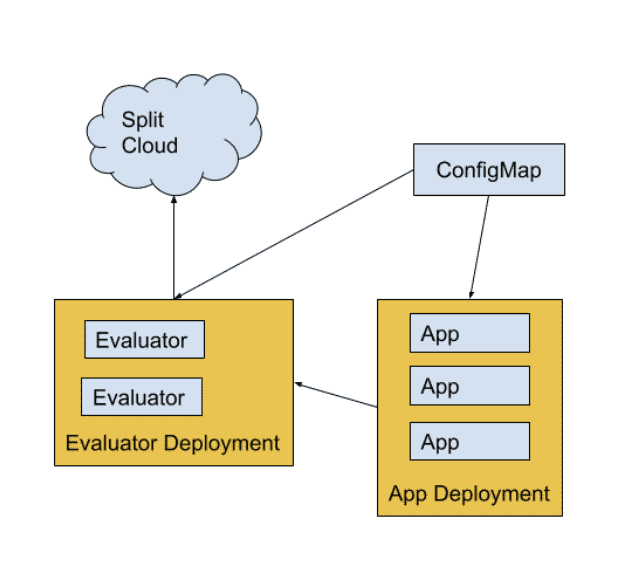

Use the Split Evaluator when there is no Split SDK for the language of your application backend. The evaluator exposes HTTP APIs that cover most of the SDK functionality: you can use them to get treatments, including treatments with dynamic config, and to track events. For details on the individual endpoints and on deploying the evaluator, see the Split Evaluator page in Split’s help center.
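To make the HTTP API concrete, here is a minimal Python sketch of how a backend without a Split SDK might build treatment requests for the evaluator. The /client/get-treatment and /client/get-treatments paths and the key, split-name, and split-names query parameters are assumptions based on our reading of the evaluator docs, so confirm them for the version you deploy. The split name new_onboarding is hypothetical; evaluator-svc:7548 is the Service defined later in this section.

```python
from urllib.parse import urlencode


def treatment_url(evaluator_url: str, key: str, split_name: str) -> str:
    """URL for one treatment lookup (assumed /client/get-treatment endpoint)."""
    # urlencode percent-encodes the values, so keys with spaces or
    # special characters are safe to pass through.
    query = urlencode({"key": key, "split-name": split_name})
    return f"{evaluator_url.rstrip('/')}/client/get-treatment?{query}"


def treatments_url(evaluator_url: str, key: str, split_names: list[str]) -> str:
    """URL for several treatments in one transaction (assumed /client/get-treatments)."""
    # Split names are joined with commas; urlencode turns each comma into %2C.
    query = urlencode({"key": key, "split-names": ",".join(split_names)})
    return f"{evaluator_url.rstrip('/')}/client/get-treatments?{query}"


if __name__ == "__main__":
    # "new_onboarding" is a hypothetical split name.
    print(treatment_url("http://evaluator-svc:7548", "user-1", "new_onboarding"))
```

The resulting URL can be fetched from inside the cluster with any HTTP client, for example urllib.request.urlopen(url). Building one multi-split URL per request is what lets a single transaction return several treatments.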

Sizing and Scaling

Internal benchmarks on an AWS c5.2xlarge EC2 instance have shown that a single-threaded evaluator instance can process 1,200 transactions per second. A single transaction can retrieve multiple split treatments when it uses the getTreatments endpoint. In this benchmark, each transaction requested two treatments, so the evaluator served 2,400 treatments per second.
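The benchmark arithmetic can be turned into a rough capacity estimate. This Python sketch reproduces the 1,200 × 2 = 2,400 figure and then, as an assumption that the benchmark does not cover, estimates how many instances a target load needs if throughput scales linearly and you keep each instance near the 50 percent utilization mark recommended below.

```python
import math

# Benchmark figures quoted above (single-threaded evaluator on c5.2xlarge).
TRANSACTIONS_PER_SEC = 1_200
TREATMENTS_PER_TRANSACTION = 2


def treatments_per_sec(tps: int = TRANSACTIONS_PER_SEC,
                       per_txn: int = TREATMENTS_PER_TRANSACTION) -> int:
    """Treatments served per second when each transaction asks for per_txn splits."""
    return tps * per_txn


def instances_needed(target_tps: float,
                     per_instance_tps: float = TRANSACTIONS_PER_SEC,
                     headroom: float = 0.5) -> int:
    """Rough instance count for target_tps transactions per second.

    Assumes throughput scales linearly with instances and that running at
    `headroom` of benchmark throughput keeps CPU near the same fraction.
    Neither assumption was part of the benchmark.
    """
    usable_tps = per_instance_tps * headroom
    return max(1, math.ceil(target_tps / usable_tps))


print(treatments_per_sec())     # 2400
print(instances_needed(3_000))  # 5
```

Treat the output as a starting point for your own load test, since real traffic mixes, payload sizes, and instance types will differ from the benchmark.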

The evaluator is stateless, so it can be scaled in and out as needed by spinning up new instances and routing requests to them. The general recommendation is to add an instance when CPU or memory usage reaches 50 percent.
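In Kubernetes, threshold-based scaling like this is usually delegated to a HorizontalPodAutoscaler. The Python sketch below approximates its documented rule, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), applied to both CPU and memory with a 50 percent target, keeping the larger result. It is a simplification: the real controller measures utilization against pod resource requests, averages across pods, and applies stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, cpu_util: float, mem_util: float,
                     target: float = 0.5, tolerance: float = 0.1) -> int:
    """Replica count that brings average CPU and memory back near `target`.

    Utilizations are fractions (0.8 means 80 percent). Each metric proposes
    ceil(current * util / target); a ratio within `tolerance` of 1.0 keeps the
    current count. The largest proposal wins, as with multi-metric HPAs.
    """
    proposals = []
    for util in (cpu_util, mem_util):
        ratio = util / target
        if abs(ratio - 1.0) <= tolerance:
            proposals.append(current_replicas)  # close enough: no change
        else:
            proposals.append(math.ceil(current_replicas * ratio))
    return max(1, max(proposals))


print(desired_replicas(2, 0.8, 0.3))  # 4: CPU at 80% wants double capacity
```

Memory usage does not always fall when replicas are added, so a memory target can keep the replica count high; watch both metrics after you enable autoscaling.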

Health Check

The evaluator exposes a health check endpoint, /admin/healthcheck, that you can use to determine whether it is healthy.
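Besides the Kubernetes probes, a client service may want to check the evaluator itself, for example before routing traffic to it. This Python sketch only assumes what is stated above: a GET on /admin/healthcheck. It treats HTTP 200 as healthy and any connection error, timeout, or error status as unhealthy. The opener parameter is a hypothetical hook added so the function can be tested without a network.

```python
import urllib.request


def evaluator_healthy(base_url: str, timeout: float = 1.0,
                      opener=urllib.request.urlopen) -> bool:
    """Return True if GET <base_url>/admin/healthcheck answers with HTTP 200.

    `opener` defaults to urllib's urlopen and can be swapped out in tests.
    """
    url = base_url.rstrip("/") + "/admin/healthcheck"
    try:
        with opener(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # URLError and HTTPError (raised for 4xx/5xx) both subclass OSError,
        # as do connection refusals and socket timeouts.
        return False
```

From a pod in the same namespace, evaluator_healthy("http://evaluator-svc:7548") would target the evaluator Service defined below.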

Sample Usage

Here are sample YAML files for creating an evaluator. They use a ConfigMap to store the SDK key and define startup and liveness probes against the health check endpoint. The split-name value in the ConfigMap lets you use your own Split organization for testing and bringing up this application.

YAML Files

ConfigMap

# ConfigMap
apiVersion: v1
data:
  api-key: <insert your api key here>
  split-name: <insert your split name here>
kind: ConfigMap
metadata:
  name: eval-configmap

Evaluator Service

# Evaluator Service
apiVersion: v1
kind: Service
metadata:
  labels:
    app: evaluator-deployment
  name: evaluator-svc
spec:
  ports:
  - name: 7548-7548
    port: 7548
    protocol: TCP
    targetPort: 7548
  selector:
    app: evaluator-deployment

Evaluator Deployment

# Evaluator Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: evaluator-deployment
  name: evaluator-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: evaluator-deployment
  template:
    metadata:
      labels:
        app: evaluator-deployment
    spec:
      containers:
      - image: splitsoftware/split-evaluator
        name: split-evaluator
        startupProbe:
          httpGet:
            path: /admin/healthcheck
            port: 7548
          initialDelaySeconds: 1
          timeoutSeconds: 1
        livenessProbe:
          httpGet:
            path: /admin/healthcheck
            port: 7548
          initialDelaySeconds: 1
          periodSeconds: 5
        env:
        - name: SPLIT_EVALUATOR_API_KEY
          valueFrom:
            configMapKeyRef:
              name: eval-configmap
              key: api-key

App NodePort Service

# App NodePort Service
apiVersion: v1
kind: Service
metadata:
  labels:
    app: pyapp-svc
  name: pyapp-svc
spec:
  ports:
  - name: "5000"
    port: 5000
    protocol: TCP
    targetPort: 5000
  selector:
    app: pyapp-demo
  type: NodePort

App Deployment

# App Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: pyapp-demo
  name: pyapp-demo
spec:
  replicas: 3
  selector:
    matchLabels:
      app: pyapp-demo
  template:
    metadata:
      labels:
        app: pyapp-demo
    spec:
      containers:
      - image: kleinjoshuaa/py-split-demo-evaluator
        name: evaluator-demo
        env:
        - name: SPLIT_NAME
          valueFrom:
            configMapKeyRef:
              name: eval-configmap
              key: split-name
        - name: EVALUATOR_URL
          value: "http://evaluator-svc:7548"
        - name: POD_ID
          valueFrom:
            fieldRef:
              fieldPath: metadata.name

The Proxy

Use Case

There are two main scenarios where the Split Proxy is useful. The first is as a workaround for ad blockers when using a client-side SDK. The Split Proxy lets the SDK connect to URL endpoints within your own domain, so an ad blocker won’t see the connection to Split’s servers, which the Proxy makes from your backend.

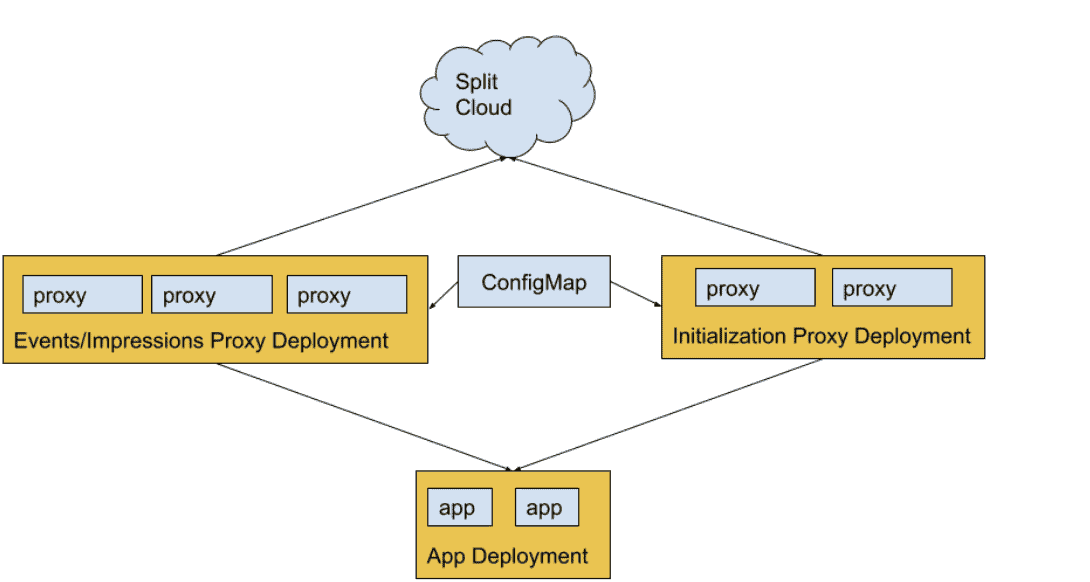

The second use case is with server-side SDKs, in a microservices environment or anywhere else multiple Split SDKs run in different services. Instead of each SDK establishing its own connection to Split Cloud, the SDKs communicate with the Split Proxy over your internal network. This can speed up SDK initialization significantly, and it lets you monitor the Split Proxy as the single point of contact with Split Cloud.

This second use case is the one we use in the example here. For the first use case, you could expose the proxies directly to the internet rather than only to the app within the cluster. For more information, see the Split Proxy page in Split’s help center.

Sizing and Scaling

To ensure SDKs have no issues initializing even under high demand, consider running separate Proxy instances for different kinds of traffic. A Proxy can handle splits and segments as well as events and impressions. Because events and impressions are more memory-intensive for the Proxy, serving a high volume of them can slow down the lighter splits and segments requests, which are the ones involved in SDK initialization. Running one set of Proxy instances for splits and segments and another for events and impressions can significantly reduce latency and lets you tune the events and impressions instances to the traffic you actually have.

Additionally, because split and segment requests are less demanding, dedicating instances to SDK initialization will likely let you serve more SDKs with fewer instances.

For reference, these are the sizing recommendations from our internal testing for a Proxy serving 1M JS SDK clients at default polling rates, stabilizing at about 50 percent CPU with lower RAM usage. Either of the following setups is recommended:

- 1 instance with 32 cores, 32GB memory, 10 Gigabit network

- 4 instances with 8 cores, 8GB memory, 10 Gigabit network
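If your client count differs from the 1M reference, a first guess can be scaled from the 4-instance option. This Python sketch assumes, without benchmark support, that load grows linearly with the number of JS SDK clients at default polling rates; anything beyond the single reference point is extrapolation.

```python
import math

# Reference point from the internal test above: 1M JS SDK clients at default
# polling rates, served by 4 instances with 8 cores and 8 GB each (~50% CPU).
REFERENCE_CLIENTS = 1_000_000
REFERENCE_INSTANCES = 4
CLIENTS_PER_INSTANCE = REFERENCE_CLIENTS / REFERENCE_INSTANCES  # 250,000


def proxy_instances(clients: int,
                    clients_per_instance: float = CLIENTS_PER_INSTANCE) -> int:
    """Estimate 8-core / 8 GB Proxy instances for `clients` JS SDK clients.

    Linear extrapolation from a single data point: treat it as a starting
    guess and validate with your own load test.
    """
    return max(1, math.ceil(clients / clients_per_instance))


print(proxy_instances(1_000_000))  # 4
print(proxy_instances(2_500_000))  # 10
```

Shorter polling intervals or heavy event traffic would raise the per-client load, which is one more reason to size the events and impressions Proxies separately.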

Health Check

The URL for the health check is /health/application, served on the Proxy’s admin port (3010 in this sample). Because Kubernetes probes cannot parse JSON responses, this sample runs a curl command inside the pod and counts the "healthy":true entries in the response.
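Outside of a probe, for example in a monitoring script, the same check can parse the JSON instead of matching text. This Python sketch counts objects carrying "healthy": true anywhere in the response and requires the same count of 3 that the sample probe uses. We do not assume a particular response layout; the component names in the example body are made up.

```python
import json
from typing import Any


def count_healthy(node: Any) -> int:
    """Count objects with "healthy": true anywhere in a parsed JSON document."""
    if isinstance(node, dict):
        own = 1 if node.get("healthy") is True else 0
        return own + sum(count_healthy(value) for value in node.values())
    if isinstance(node, list):
        return sum(count_healthy(item) for item in node)
    return 0  # strings, numbers, booleans and null contribute nothing


def proxy_healthy(body: str, expected: int = 3) -> bool:
    """True when the health response reports exactly `expected` healthy entries."""
    try:
        return count_healthy(json.loads(body)) == expected
    except json.JSONDecodeError:
        return False  # an unparseable response is treated as unhealthy


# Made-up body shaped only to exercise the function.
sample = '{"sdk":{"healthy":true},"events":{"healthy":true},"storage":{"healthy":true}}'
print(proxy_healthy(sample))  # True
```

Unlike the grep in the probe, this version is not affected by whitespace such as "healthy": true, and a value of false is never counted.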

Sample Usage

Here are sample YAML files for creating a Proxy. They use a ConfigMap to store the SDK keys and define startup and liveness probes. A NodePort service lets you reach the app from outside the cluster. There are separate Proxy deployments: one for the events.split.io endpoints (events and impressions) and one for sdk.split.io. The split-name value in the ConfigMap lets you use your own Split organization for testing and bringing up this application.
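On the application side, the two Proxy Services are wired in through the SDK’s URL settings. This Python sketch maps the SDK_PROXY_URL and EVENTS_PROXY_URL variables set in the App Deployment below into an SDK configuration dictionary, so split and segment fetches go to proxy-sdk-svc while events and impressions go to proxy-events-svc. The sdk_url and events_url option names are our assumption about the Python SDK’s configuration keys; check the SDK reference before relying on them.

```python
import os
from typing import Mapping


def proxy_sdk_config(env: Mapping[str, str] = os.environ) -> dict:
    """Point SDK traffic at the two in-cluster Proxy Services.

    Raises KeyError if either variable is missing, so a misconfigured pod
    fails fast instead of silently falling back to the SDK's default URLs.
    The "sdk_url" and "events_url" keys are assumed SDK option names.
    """
    return {
        # Split and segment fetches (SDK initialization) -> proxy-sdk-svc
        "sdk_url": env["SDK_PROXY_URL"],
        # Events and impressions -> proxy-events-svc
        "events_url": env["EVENTS_PROXY_URL"],
    }
```

If you use the Python SDK, the result would be passed to the factory along with the client SDK key from API_KEY, for example get_factory(os.environ["API_KEY"], config=proxy_sdk_config()).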

YAML Files

ConfigMap

# ConfigMap
apiVersion: v1
data:
  api-key: <insert the SDK key here to connect to split cloud>
  client-api-key: <insert the 'client' SDK key for your SDK clients to use>
  split-name: <insert your split name here>
kind: ConfigMap
metadata:
  name: proxy-configmap

Events Proxy Deployment

# Events Proxy Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: proxy-events-deployment
  name: proxy-events-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: proxy-events-deployment
  template:
    metadata:
      labels:
        app: proxy-events-deployment
    spec:
      containers:
      - image: splitsoftware/split-proxy
        name: split-proxy
        startupProbe:
          exec:
            command:
            - /bin/bash
            - -c
            - health=`curl -s localhost:3010/health/application | grep '"healthy":true' -o | wc -l`; if test $health -eq 3; then exit 0; else exit 1; fi
          initialDelaySeconds: 3
          timeoutSeconds: 1
        livenessProbe:
          exec:
            command:
            - /bin/bash
            - -c
            - health=`curl -s localhost:3010/health/application | grep '"healthy":true' -o | wc -l`; if test $health -eq 3; then exit 0; else exit 1; fi
          initialDelaySeconds: 1
          periodSeconds: 5
        env:
        - name: SPLIT_PROXY_APIKEY
          valueFrom:
            configMapKeyRef:
              name: proxy-configmap
              key: api-key
        - name: SPLIT_PROXY_CLIENT_APIKEYS
          valueFrom:
            configMapKeyRef:
              name: proxy-configmap
              key: client-api-key

Events Proxy Service

# Events Proxy Service
apiVersion: v1
kind: Service
metadata:
  labels:
    app: proxy-events-svc
  name: proxy-events-svc
spec:
  ports:
  - name: proxy
    port: 3000
    protocol: TCP
    targetPort: 3000
  - name: admin
    port: 3010
    protocol: TCP
    targetPort: 3010
  selector:
    app: proxy-events-deployment

SDK Proxy Deployment

# SDK Proxy Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: proxy-sdk-deployment
  name: proxy-sdk-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: proxy-sdk-deployment
  template:
    metadata:
      labels:
        app: proxy-sdk-deployment
    spec:
      containers:
      - image: splitsoftware/split-proxy
        name: split-proxy
        startupProbe:
          exec:
            command:
            - /bin/bash
            - -c
            - health=`curl -s localhost:3010/health/application | grep '"healthy":true' -o | wc -l`; if test $health -eq 3; then exit 0; else exit 1; fi
          initialDelaySeconds: 3
          timeoutSeconds: 1
        livenessProbe:
          exec:
            command:
            - /bin/bash
            - -c
            - health=`curl -s localhost:3010/health/application | grep '"healthy":true' -o | wc -l`; if test $health -eq 3; then exit 0; else exit 1; fi
          initialDelaySeconds: 1
          periodSeconds: 5
        env:
        - name: SPLIT_PROXY_APIKEY
          valueFrom:
            configMapKeyRef:
              name: proxy-configmap
              key: api-key
        - name: SPLIT_PROXY_CLIENT_APIKEYS
          valueFrom:
            configMapKeyRef:
              name: proxy-configmap
              key: client-api-key

SDK Proxy Service

# SDK Proxy Service
apiVersion: v1
kind: Service
metadata:
  labels:
    app: proxy-sdk-svc
  name: proxy-sdk-svc
spec:
  ports:
  - name: proxy
    port: 3000
    protocol: TCP
    targetPort: 3000
  - name: admin
    port: 3010
    protocol: TCP
    targetPort: 3010
  selector:
    app: proxy-sdk-deployment

App Deployment

# App Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: pyapp-demo
  name: pyapp-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: pyapp-demo
  template:
    metadata:
      labels:
        app: pyapp-demo
    spec:
      containers:
      - image: kleinjoshuaa/py-split-demo-proxy
        name: proxy-demo
        env:
        - name: SPLIT_NAME
          valueFrom:
            configMapKeyRef:
              name: proxy-configmap
              key: split-name
        - name: API_KEY
          valueFrom:
            configMapKeyRef:
              name: proxy-configmap
              key: client-api-key
        - name: EVENTS_PROXY_URL
          value: "http://proxy-events-svc:3000/api"
        - name: SDK_PROXY_URL
          value: "http://proxy-sdk-svc:3000/api"
        - name: POD_ID
          valueFrom:
            fieldRef:
              fieldPath: metadata.name

App Service

# App Service
apiVersion: v1
kind: Service
metadata:
  labels:
    app: pyapp-svc
  name: pyapp-svc
spec:
  ports:
  - name: "5000"
    port: 5000
    protocol: TCP
    targetPort: 5000
  selector:
    app: pyapp-demo
  type: NodePort

The Synchronizer

Use Case

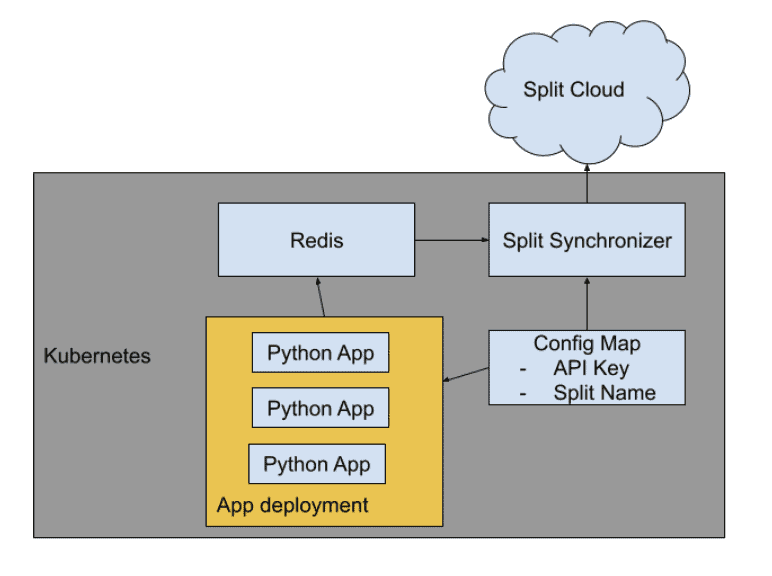

The Split Synchronizer coordinates sending and receiving data to and from a remote datastore (Redis). All of your processes can share this datastore for evaluating treatments, so it acts as the cache for your SDKs. The Synchronizer, Redis, and your app can all be orchestrated in a Kubernetes cluster, and we will show a basic example of this.

Sizing and Scaling

The Synchronizer is multithreaded and can handle production-level traffic; increase the number of threads posting events and impressions as volume grows. For more information on sizing Redis and the Synchronizer for production-level workloads, review our Synchronizer Runbook.

Health Check

The URL for the health check is /health/application. As with the Proxy, this sample runs a curl command inside the pod to perform the health check, because Kubernetes probes cannot parse JSON responses.

Sample Usage

Here are sample YAML files for creating the demo app, the Synchronizer, and Redis. They use a ConfigMap to store the SDK key and define startup and liveness probes. A NodePort service lets you reach the app, which uses the SDK, from outside the cluster. The split-name value in the ConfigMap lets you use your own Split organization for testing and bringing up this application.
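In this setup the app’s SDK reads flag data from Redis, where the Synchronizer keeps it current, instead of fetching it from Split. This Python sketch translates the USE_REDIS, REDIS_HOST, and REDIS_PORT variables set in the App Deployment below into SDK storage settings. The redisHost and redisPort option names are our assumption about the Python SDK’s Redis configuration keys, and the localhost fallback is a hypothetical default; verify both against the SDK reference.

```python
import os
from typing import Mapping


def redis_sdk_config(env: Mapping[str, str] = os.environ) -> dict:
    """Translate the App Deployment's Redis env vars into SDK settings.

    Returns an empty dict (the SDK's default, in-memory mode) unless
    USE_REDIS is "true" in any letter case. "redisHost"/"redisPort" are
    assumed SDK option names; the localhost fallback is hypothetical.
    """
    if env.get("USE_REDIS", "").lower() != "true":
        return {}
    return {
        "redisHost": env.get("REDIS_HOST", "localhost"),
        # Environment values are strings; the port is converted to int.
        "redisPort": int(env.get("REDIS_PORT", "6379")),
    }
```

Pointing REDIS_HOST at redis-svc, the same Service the Synchronizer writes to, is what lets every app replica share one cache.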

YAML Files

ConfigMap

# ConfigMap
apiVersion: v1
data:
  api-key: <insert your SDK Key here>
  split-name: <insert your split name here>
kind: ConfigMap
metadata:
  name: app-configmap

App Deployment

# App Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: pyapp
  name: pyapp
spec:
  replicas: 3
  selector:
    matchLabels:
      app: pyapp
  template:
    metadata:
      labels:
        app: pyapp
    spec:
      containers:
      - image: kleinjoshuaa/py-split-demo
        name: split-demo
        env:
        - name: API_KEY
          valueFrom:
            configMapKeyRef:
              name: app-configmap
              key: api-key
        - name: SPLIT_NAME
          valueFrom:
            configMapKeyRef:
              name: app-configmap
              key: split-name
        - name: USE_REDIS
          value: "True"
        - name: REDIS_HOST
          value: "redis-svc"
        - name: REDIS_PORT
          value: "6379"
        - name: POD_ID
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
status: {}

App Service

# App Service
apiVersion: v1
kind: Service
metadata:
  labels:
    app: pyapp-svc
  name: pyapp-svc
spec:
  ports:
  - name: "5000"
    port: 5000
    protocol: TCP
    targetPort: 5000
  selector:
    app: pyapp
  type: NodePort

Redis Pod

# Redis Pod
apiVersion: v1
kind: Pod
metadata:
  name: redis
  labels:
    app: redis
spec:
  containers:
  - name: redis
    image: redis

Redis Service

# Redis Service
apiVersion: v1
kind: Service
metadata:
  name: redis-svc
spec:
  selector:
    app: redis
  ports:
  - port: 6379
    targetPort: 6379

Synchronizer Pod

# Synchronizer Pod
apiVersion: v1
kind: Pod
metadata:
  name: split-sync
  labels:
    app: split-sync
spec:
  containers:
  - name: split-sync
    image: splitsoftware/split-synchronizer
    startupProbe:
      exec:
        command:
        - /bin/bash
        - -c
        - health=`curl -s localhost:3010/health/application | grep '"healthy":true' -o | wc -l`; if test $health -eq 3; then exit 0; else exit 1; fi
      initialDelaySeconds: 3
      timeoutSeconds: 1
    livenessProbe:
      exec:
        command:
        - /bin/bash
        - -c
        - health=`curl -s localhost:3010/health/application | grep '"healthy":true' -o | wc -l`; if test $health -eq 3; then exit 0; else exit 1; fi
      initialDelaySeconds: 1
      periodSeconds: 5
    env:
    - name: SPLIT_SYNC_APIKEY
      valueFrom:
        configMapKeyRef:
          name: app-configmap
          key: api-key
    - name: SPLIT_SYNC_REDIS_HOST
      value: redis-svc
    - name: SPLIT_SYNC_REDIS_PORT
      value: "6379"

Get Split Certified

Split Arcade includes product explainer videos, clickable product tutorials, manipulatable code examples, and interactive challenges.

Switch It On With Split

The Split Feature Data Platform™ gives you the confidence to move fast without breaking things. Set up feature flags and safely deploy to production, controlling who sees which features and when. Connect every flag to contextual data, so you can know if your features are making things better or worse and act without hesitation. Effortlessly conduct feature experiments like A/B tests without slowing down. Whether you’re looking to increase your releases, to decrease your MTTR, or to ignite your dev team without burning them out, Split is both a feature management platform and a partnership to revolutionize the way work gets done. Switch on a free account today, schedule a demo, or contact us for further questions.