For any software company, reducing logs helps to save money. We also know precisely how painful it is to have a production problem or even an incident only to find that we haven’t logged nearly enough. There are several different strategies to try to balance these two conflicting goals, including configuration to control log levels and sampling. In this post, we will discuss how feature flags can help you improve your logging strategy. As a result, you can update variables without pushing a configuration change, allowing for faster modifications in a crisis.

First, whether or not you use feature flags, we recommend wrapping your logging in an internal library. This has a few advantages. It allows you to keep a consistent format across your logs. Instead of relying on each developer to formulate their own logs, you can have them specify a few parameters and format the rest for them. Additionally, it allows you to automatically fill in fields you want everywhere, such as trace_id or user_id (or whatever applies to your application). Finally, it gives you a single location to add a feature flag.

Now that we have a feature flag for our logs, how does that help? We will set it up to use that feature flag to control sampling rate and log level per class. There are a few ways to do this, and we’ll follow up with another post about how we actually did this for our own logs. For this post, though, we will explain one of the other options.

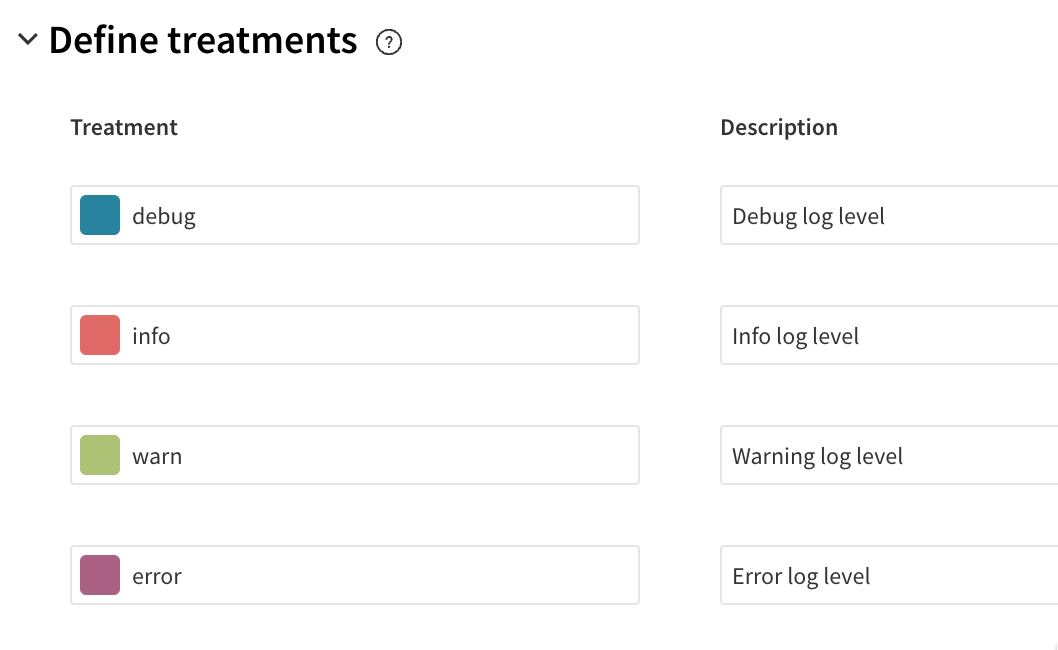

At a high level, we will set up a default logging level with the ability to override this—at the class level. To do this, we’ll start by creating a treatment for each log level.

Define Treatments

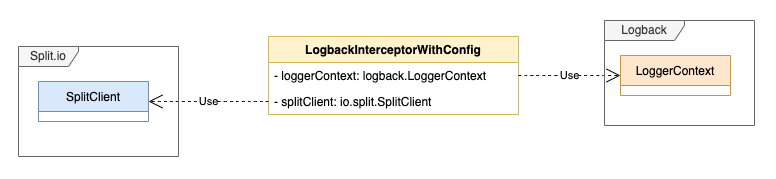

Once we have created the Split with the log levels, we need to create a Logback Interceptor class. This will fetch the Split changes periodically and sets up the right level to the ROOT logger in runtime. The next class diagrams illustrate the idea:

And the next code snippet implements the Logback Interceptor:

package my.awesome.app.log;

import ch.qos.logback.classic.Logger;

import ch.qos.logback.classic.LoggerContext;

import io.split.client.SplitClient;

import org.slf4j.event.Level;

import java.util.concurrent.Executors;

import java.util.concurrent.ScheduledExecutorService;

import java.util.concurrent.TimeUnit;

public class LogbackInterceptor {

private static final LoggerContext loggerContext = (LoggerContext) org.slf4j.LoggerFactory.getILoggerFactory();

private static final ScheduledExecutorService executor = Executors.newSingleThreadScheduledExecutor();

private static Level currentLevel = Level.INFO;

private static SplitClient splitClient;

private static String splitName;

private static void setLogbackLevel(String name, String level) {

Logger logger = loggerContext.exists(name);

if (logger != null) {

logger.setLevel(ch.qos.logback.classic.Level.toLevel(level));

}

}

private static void setupLogLevel() {

if (splitClient == null || splitName == null) {

return;

}

String level = splitClient.getTreatment("<GLOBAL>", splitName);

if ("control".equals(level)) {

return;

}

try {

currentLevel = Level.valueOf(level.toUpperCase());

} catch (Exception e) {

currentLevel = Level.INFO;

}

// Setting up Logback ROOT level

setLogbackLevel(Logger.ROOT_LOGGER_NAME, currentLevel.name());

}

public static void init(SplitClient splitClient, String splitName) {

LogbackInterceptor.splitClient = splitClient;

LogbackInterceptor.splitName = splitName;

executor.scheduleAtFixedRate(LogbackInterceptor::setupLogLevel, 0, 5, TimeUnit.SECONDS);

Runtime.getRuntime().addShutdownHook(new Thread(executor::shutdown));

}

}Get It Running

To get it running, add a single call to the static method init() injecting the SplitClient (see how to setup Split SDK here) and the Split name:

package my.awesome.app.log;

import io.split.client.SplitClient;

public class DemoLogApplication {

private static void setupLogger() {

String splitName = "logger-runtime-levels";

try {

String apiKey = "your-split-api key";

SplitFactory splitFactory = SplitFactoryBuilder.build(apiKey, config);

SplitClient client = splitFactory.client();

try {

LogbackInterceptor.init(client, splitName);

} catch (TimeoutException | InterruptedException e) {

e.printStackTrace();

}

} catch (Exception e) {

e.printStackTrace();

}

}

public static void main(String[] args) {

setupLogger();

// Run your program

}

}So, with this simple code you can handle runtime log levels without stopping your program execution.

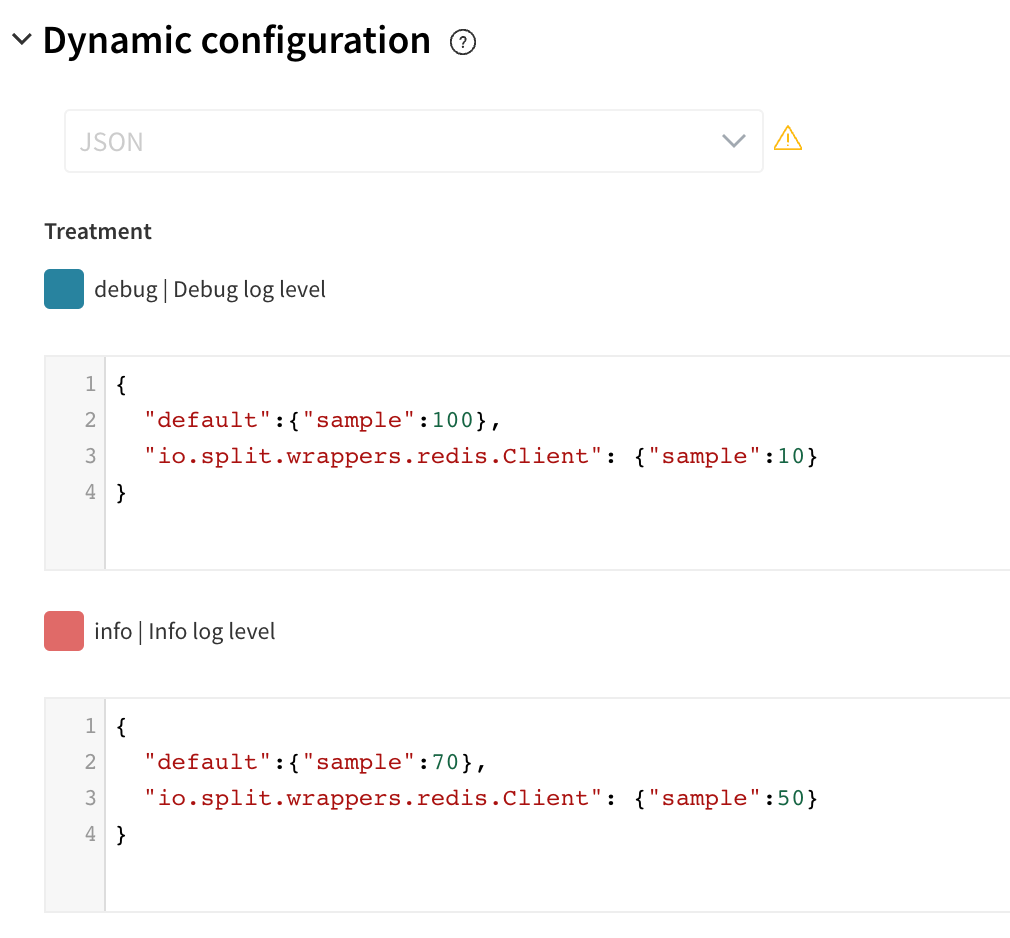

Taking this further, we can add a little more complexity to handle not only the log level but to also control the number of logs by sampling. To do this, we need to create a Logback appender and use the Split feature known as Dynamic Configuration:

The first step is to configure the Split with the desired configuration approach; you can use key-value pairs or a custom JSON.

Dynamic Configuration

In this example, we are setting up a custom JSON value to have more flexibility in our configuration:

Once we have set our dynamic configuration per treatment, we can write our code.

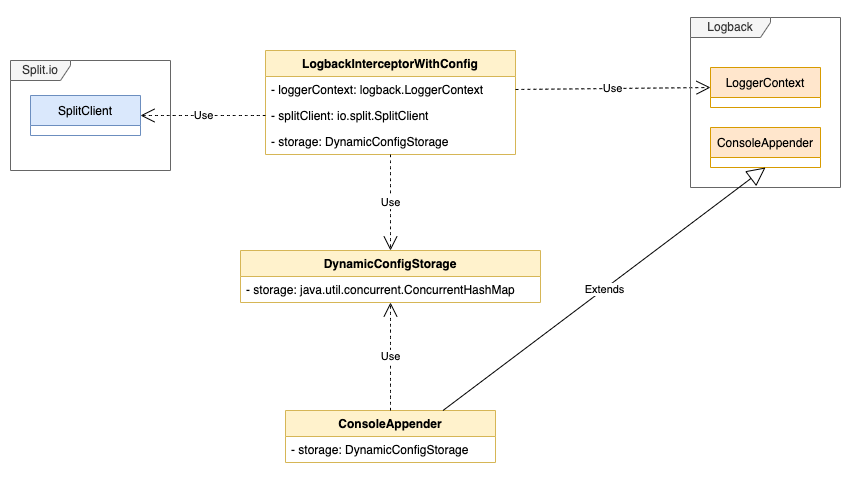

In this case, we will create a concurrent storage class to share the dynamic configuration across our LogbackInterceptor class. The LogbackInterceptor will fetch data from Split and write the configuration values into storage. The Logback appender will be reading from the storage when sampling log lines.

The next diagram illustrates this approach:

So, following the previous diagram the code of each class will be:

// LogbackInterceptorWithConfig.class

package my.awesome.app.log;

import ch.qos.logback.classic.Logger;

import ch.qos.logback.classic.LoggerContext;

import com.google.gson.Gson;

import com.google.gson.internal.LinkedTreeMap;

import io.split.client.SplitClient;

import io.split.client.api.SplitResult;

import org.slf4j.event.Level;

import java.util.Map;

import java.util.concurrent.Executors;

import java.util.concurrent.ScheduledExecutorService;

import java.util.concurrent.TimeUnit;

public class LogbackInterceptorWithConfig {

private static final LoggerContext loggerContext = (LoggerContext) org.slf4j.LoggerFactory.getILoggerFactory();

private static final ScheduledExecutorService executor = Executors.newSingleThreadScheduledExecutor();

private static Level currentLevel = Level.INFO;

private static SplitClient splitClient;

private static String splitName;

private static final Gson gson = new Gson();

private static void setLogbackLevel(String name, String level) {

Logger logger = loggerContext.exists(name);

if (logger != null) {

logger.setLevel(ch.qos.logback.classic.Level.toLevel(level));

}

}

private static void setupLogLevel() {

if (splitClient == null || splitName == null) {

return;

}

SplitResult result = splitClient.getTreatmentWithConfig("<GLOBAL>", splitName);

String level = result.treatment();

if ("control".equals(level)) {

return;

}

try {

currentLevel = Level.valueOf(level.toUpperCase());

} catch (Exception e) {

currentLevel = Level.INFO;

}

// Setting up Logback ROOT level

setLogbackLevel(Logger.ROOT_LOGGER_NAME, currentLevel.name());

// Setting up the dynamic configurations

try {

setupLogConfigs(result.config());

} catch (Exception e) {

System.out.println(String.format("#EXCEPTION LogbackInterceptorWithConfig::setupLogLevel %s\n", e.getMessage()));

}

}

private static void setupLogConfigs(String jsonConfig) {

Map<String, LinkedTreeMap<String, Object>> confMap = gson.fromJson(jsonConfig, Map.class);

// reset storage to update it with new configurations

DynamicConfigStorage.clear();

// setting up dynamic configurations per logger name

confMap.forEach((loggerName, conf) -> {

int sample = ((Double) conf.getOrDefault("sample", 100)).intValue();

DynamicConfigStorage.putSample(loggerName, sample);

});

}

public static void init(SplitClient splitClient, String splitName) {

LogbackInterceptorWithConfig.splitClient = splitClient;

LogbackInterceptorWithConfig.splitName = splitName;

executor.scheduleAtFixedRate(LogbackInterceptorWithConfig::setupLogLevel, 0, 5, TimeUnit.SECONDS);

Runtime.getRuntime().addShutdownHook(new Thread(executor::shutdown));

}

}// DynamicConfigStorage.class

package my.awesome.app.log;

import java.util.Map;

import java.util.concurrent.ConcurrentHashMap;

import java.util.function.BiConsumer;

public class DynamicConfigStorage {

private static final Map<String, Integer> configs = new ConcurrentHashMap<>();

private static final String DEFAULT_CONFIG = "default";

public static void clear() {

configs.clear();

}

public static int size() {

return configs.size();

}

public static void putSample(String key, Integer sample) {

configs.put(key, sample);

}

public static boolean isEmpty() {

return configs.isEmpty();

}

public static boolean containsKey(String key) {

return configs.containsKey(key);

}

public static Integer getSample(String key) {

return configs.get(key);

}

public static Integer getDefaultSample() {

if (configs.containsKey(DEFAULT_CONFIG)) {

return configs.get(DEFAULT_CONFIG);

}

return 100;

}

public static void forEach(BiConsumer <String, Integer> action) {

configs.forEach(action);

}

}// ConsoleAppender.class

package my.awesome.app.log;

import java.util.Map;

import java.util.concurrent.ConcurrentHashMap;

import java.util.function.BiConsumer;

package my.awesome.app.log;

import ch.qos.logback.classic.spi.LoggingEvent;

import java.util.Random;

public class ConsoleAppender<E> extends ch.qos.logback.core.ConsoleAppender<E> {

protected final Random random = new Random();

@Override

protected void append(E eventObject) {

if (!isStarted()) {

return;

}

if (eventObject instanceof LoggingEvent) {

String loggerName = ((LoggingEvent) eventObject).getLoggerName();

int sample;

if (DynamicConfigStorage.containsKey(loggerName)) {

sample = DynamicConfigStorage.getSample(loggerName);

} else {

sample = DynamicConfigStorage.getDefaultSample();

}

if (!shouldLog(sample)) {

return;

}

}

subAppend(eventObject);

}

private boolean shouldLog(int sample) {

if (sample <= 0) {

return false;

} else if (sample >= 100) {

return true;

}

int value = this.random.nextInt(100);

return value <= sample;

}

}/**

* As you can see, we have chosen a JSON object with the following format:

* "{ <logger-name>: { <logger-attribute>:<value> } }" This format will allow

* more flexibility and new features in the future can be added. For example,

* if we would like to replace the log level by logger, that could be possible

* by adding the attribute "level" and making a small change in our logic to

* the LogbackInterceptorWithConfig.class:

*/

private static void setupLogConfigs(String jsonConfig) {

Map<String, LinkedTreeMap<String, Object>> confMap = gson.fromJson(jsonConfig, Map.class);

// reset storage to update it with new configurations

DynamicConfigStorage.clear();

// setting up dynamic configurations per logger name

confMap.forEach((loggerName, conf) -> {

int sample = ((Double) conf.getOrDefault("sample", 100)).intValue();

DynamicConfigStorage.putSample(loggerName, sample);

String lvl = (String) conf.getOrDefault("level", "info");

setLogbackLevel(loggerName, lvl);

});

}Now you can see how feature flags, and especially Split, can help improve your logging. We allow you to log less with the piece of mind that you can quickly and easily increase logs if something happens. And we can do all of this without pushing a code or configuration change and without littering the code with separate feature flags just waiting to be flipped in case of emergency.

Get Split Certified

Split Arcade includes product explainer videos, clickable product tutorials, manipulatable code examples, and interactive challenges.

Switch It On With Split

Split gives product development teams the confidence to release features that matter faster. It’s the only feature management and experimentation solution that automatically attributes data-driven insight to every feature that’s released—all while enabling astoundingly easy deployment, profound risk reduction, and better visibility across teams. Split offers more than a platform: It offers partnership. By sticking with customers every step of the way, Split illuminates the path toward continuous improvement and timely innovation. Switch on a trial account, schedule a demo, or contact us for further questions.